Redefining your ITSM Strategy in the Age of AI Agents

Most IT teams are adding AI into their service stack, but few are ready for what that actually means.

A chatbot that answers FAQs or routes tickets isn’t “agent enablement.” It’s interface decoration. True agentic transformation affects how your systems think, act, escalate, and learn. It’s about workflows that machines can interpret, knowledge bases they can reason through, and decisions they can execute safely, on your behalf.

Done poorly, agent adoption leads to brittle automations, unresolved edge cases, and shadow workflows that leave humans cleaning up. Done well, it becomes a force multiplier: reducing backlog, increasing trust, and accelerating issue resolution with minimal intervention.

This guide offers a structured, four-phase adoption model, from initial assessment to scalable, multi-agent orchestration.

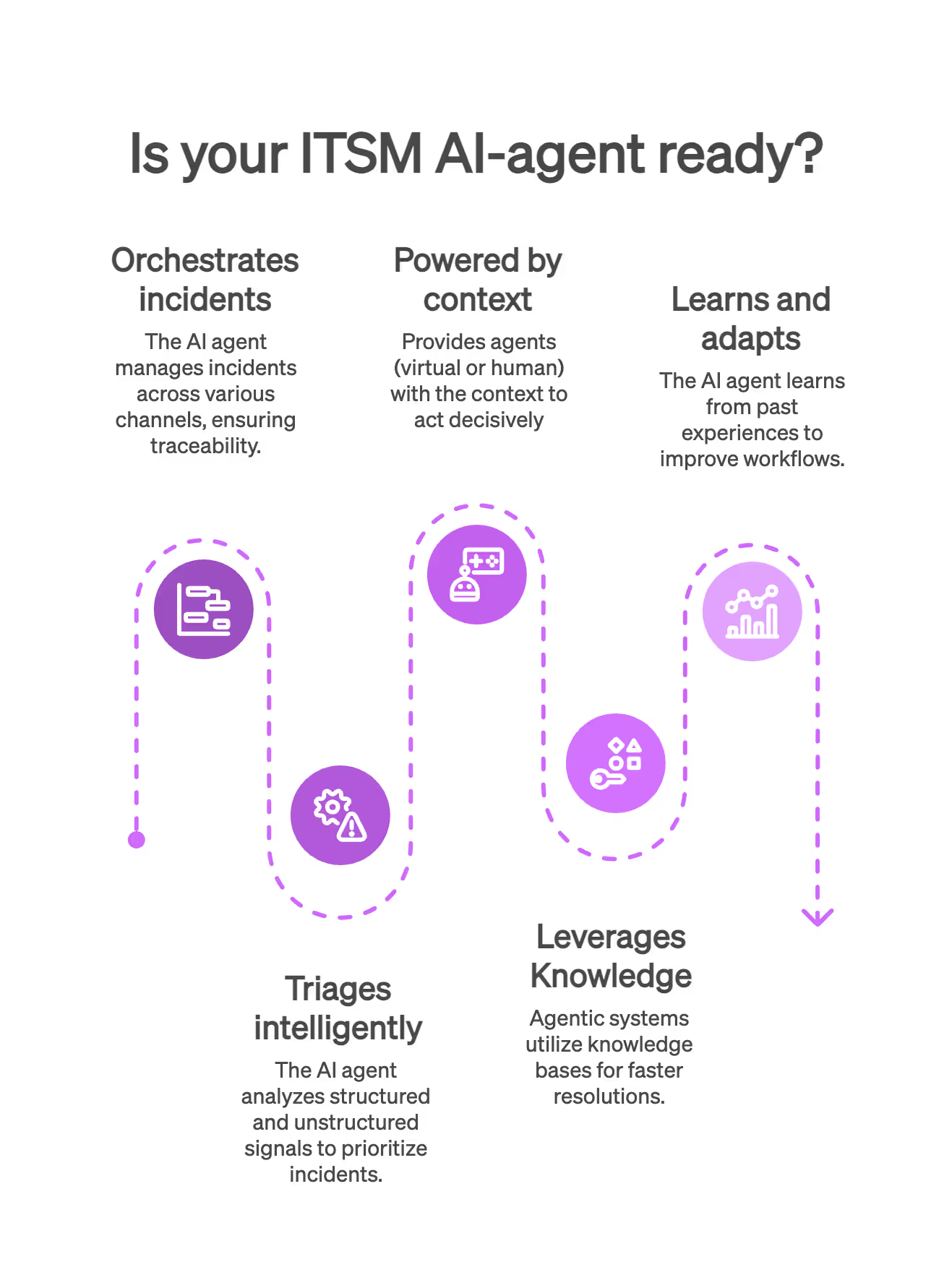

What does “AI Agent-Ready” mean?

An “AI agent-ready” ITSM environment is not simply one that has agents (virtual or human) in place. It is one where AI agents can operate effectively, responsibly, and in alignment with broader service goals. It presumes semantic data structures, consistent taxonomy, readable logs, and above all, mature knowledge management practices.

Agent-readiness is achieved when a system can:

- Orchestrate incidents across channels with clarity and traceability

- Triage intelligently based on both structured and unstructured signals

- Provide agents (virtual or human) with the context to act decisively

- Leverage knowledge as a first-class citizen in resolution flows

- Learn from past service experiences and adapt workflows accordingly

Let us now examine how to reach such a state through a deliberate, phased approach.

Phase 1: Assessment & readiness

The starting point is an honest baseline assessment. This involves more than a tool audit or a ticket analysis.

It means examining whether your:

- Current workflows are intelligible to machines

- Metadata is consistent

- Agents have the authority to act when automation fails

Key activities in this phase often involve mapping existing workflows, clarifying decision nodes, and aligning stakeholders across IT operations, HR, compliance, and service desk teams. At this stage, maturity models such as the ITIL 4 Service Value System or the Capability Maturity Model Integration (CMMI) can help reveal systemic blind spots.
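One way to make the baseline concrete is to audit a ticket export for the metadata problems described above. The following is a minimal sketch, not a definitive implementation: the field names (`category`, `priority`, `assignment_group`), the canonical category list, and the sample tickets are all assumptions made for illustration, and a real audit would read them from your own ITSM platform.

```python
from collections import Counter

# Hypothetical canonical taxonomy; a real one would come from your service catalogue.
CANONICAL_CATEGORIES = {"access", "hardware", "network", "software"}
# Hypothetical set of fields an agent would need on every ticket.
REQUIRED_FIELDS = ("category", "priority", "assignment_group")


def audit_tickets(tickets):
    """Count metadata problems that make tickets hard for a machine to interpret."""
    findings = Counter()
    for ticket in tickets:
        # Empty strings and None both count as missing.
        for field in REQUIRED_FIELDS:
            if not ticket.get(field):
                findings[f"missing_{field}"] += 1
        category = ticket.get("category")
        if category and category.strip().lower() not in CANONICAL_CATEGORIES:
            # Legacy or free-text tags that do not map to the taxonomy.
            findings["unknown_category"] += 1
        elif category and category != category.strip().lower():
            # Known category, but stored with stray whitespace or casing.
            findings["inconsistent_category_format"] += 1
    return dict(findings)


sample_tickets = [
    {"category": "network", "priority": "P2", "assignment_group": "netops"},
    {"category": "Network ", "priority": "P3", "assignment_group": "netops"},
    {"category": "misc-old", "priority": "", "assignment_group": "desk"},
    {"category": None, "priority": "P1", "assignment_group": None},
]

report = audit_tickets(sample_tickets)
print(report)
```

Counts like these give stakeholders a shared, measurable view of documentation and metadata debt before any agent is introduced.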

Common pitfalls:

- Overemphasis on tooling without cultural alignment.

- Neglecting documentation debt and knowledge fragmentation.

- Underestimating how hard it is for junior agents (or bots) to parse ambiguous categories or legacy tags.

Phase 2: Foundation & automation

Once gaps are clear, the next step is to establish a foundation of structured automation and intelligent routing. This is where service catalogues, approval matrices, and change enablement protocols are codified. The workflows must become transparent, semantically valid, and auditable.

Automation at this stage should focus on high-volume, low-variability tasks - such as password resets, equipment provisioning, and routine change requests. Where appropriate, virtual agents can assist, but they remain on a short leash. Human agents retain control.
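To illustrate what "high-volume, low-variability" might look like in practice, the sketch below ranks request types by ticket count and by how consistent their handling time is. The thresholds, the use of the coefficient of variation as a variability measure, and the sample history are assumptions chosen for illustration, not a prescribed method.

```python
from statistics import mean, pstdev


def automation_candidates(history, min_volume=3, max_cv=0.25):
    """Return request types that are both frequent and consistent in handling time.

    history maps a request type to a list of handling times in minutes.
    """
    candidates = []
    for request_type, minutes in history.items():
        if len(minutes) < min_volume:
            continue  # too few tickets to justify automation
        average = mean(minutes)
        if average == 0:
            continue  # avoid dividing by zero on degenerate data
        cv = pstdev(minutes) / average  # coefficient of variation
        if cv <= max_cv:
            candidates.append(request_type)
    return sorted(candidates)


# Hypothetical handling-time history, in minutes per ticket.
history = {
    "password_reset": [5, 6, 5, 5, 6],
    "laptop_provisioning": [40, 45, 42, 44],
    "vpn_outage": [15, 120, 60, 300],
    "license_dispute": [90, 30],
}

candidates = automation_candidates(history)
print(candidates)
```

In this sample, the erratic `vpn_outage` and the rare `license_dispute` are left for human agents, which matches the short-leash principle above.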

Knowledge management becomes foundational here. Every automation node must have a fallback path to documented procedures or decision trees. Agent deflection only works when there’s a knowledge asset to deflect to.
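The fallback requirement can be enforced structurally, so that an automation step cannot even be defined without a knowledge asset behind it. This is a hedged sketch under stated assumptions: `AutomationNode`, `run_node`, the `reset_password` action, and the article ID `KB-0001` are hypothetical names invented for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AutomationNode:
    name: str
    action: Callable[[dict], str]
    fallback_article: str  # hypothetical knowledge-base article ID

    def __post_init__(self):
        # Refuse to define a node that has nothing to deflect to.
        if not self.fallback_article:
            raise ValueError(f"node '{self.name}' has no fallback article")


def run_node(node, ticket):
    """Run the automated action; on failure, point to the documented procedure."""
    try:
        return {"status": "automated", "detail": node.action(ticket)}
    except Exception as exc:
        return {
            "status": "deflected",
            "article": node.fallback_article,
            "reason": str(exc),
        }


def reset_password(ticket):
    # Illustrative rule: only standard accounts are reset without a human.
    if ticket.get("account_type") != "standard":
        raise ValueError("only standard accounts can be reset automatically")
    return f"reset link sent to {ticket['user']}"


node = AutomationNode("password_reset", reset_password, "KB-0001")
ok = run_node(node, {"user": "avery", "account_type": "standard"})
deflected = run_node(node, {"user": "svc-backup", "account_type": "service"})
print(ok)
print(deflected)
```

The design choice here is that the fallback is part of the node definition rather than an afterthought, so gaps in knowledge coverage surface when the automation is built, not when it fails.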

Common pitfalls:

- Implementing robotic process automation (RPA) without integrating knowledge articles.

- Creating automation silos that operate outside change management.

- Assuming users will self-serve without being shown how.

Phase 3: Agent enablement & AI integration

Before introducing agents, IT leaders must understand that not all agents operate at the same level of sophistication. Most current implementations remain at the lower end of what we might call the spectrum of agency.

At the base are systems with no real agency - those that trigger workflows or respond with predefined answers based on training data. Slightly higher are routing models that classify inputs and direct tickets accordingly. Beyond that lie tool-using agents that can interact with APIs or systems autonomously, and at the farthest end, multi-agent systems that coordinate with one another to execute complex, multi-step tasks across domains.

Introducing even a moderately agentic system requires deliberate preparation. Workflows must be legible to machines. Metadata must be reliable. Failure paths must be defined with clarity. An AI agent cannot guess its way through a broken taxonomy or infer context from scattered documentation. It needs well-structured tickets, consistent category logic, and a semantic knowledge base that embeds reasoning, not just answers.

This is also the phase where knowledge management transitions from supportive to strategic. Articles are no longer written only for human readers - they are ingested, interpreted, and operationalised by agents. Their structure, language, and versioning now directly impact the agent’s ability to act effectively. Every ambiguity in phrasing, every missing context, becomes a potential error in execution.

Change management also becomes non-negotiable. Not only must agent interventions be logged and reversible, but CAB (Change Advisory Board) processes must expand to consider AI-initiated actions. An agent that automatically remediates an issue or triggers provisioning must be governed under the same controls as a human administrator. This requires alignment between service desk, IT operations, compliance, and platform governance teams.
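As a rough illustration of "logged and reversible", the sketch below records every agent action alongside the function that undoes it. The `ActionLedger` class, the actor label, and the in-memory `vpn_group` set are assumptions for the example; a real deployment would write to the platform's change records rather than a Python list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass
class LoggedAction:
    actor: str
    description: str
    undo: Callable[[], None]
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    reverted: bool = False


class ActionLedger:
    """Append-only record of actions, each paired with its reversal."""

    def __init__(self):
        self.entries: List[LoggedAction] = []

    def execute(self, actor, description, do, undo):
        do()
        entry = LoggedAction(actor, description, undo)
        self.entries.append(entry)
        return entry

    def revert_last(self):
        # Undo the most recent action that has not already been reverted.
        for entry in reversed(self.entries):
            if not entry.reverted:
                entry.undo()
                entry.reverted = True
                return entry
        return None


vpn_group = set()  # stand-in for a real directory group
ledger = ActionLedger()
entry = ledger.execute(
    actor="agent:provisioner",
    description="add avery to vpn-users",
    do=lambda: vpn_group.add("avery"),
    undo=lambda: vpn_group.discard("avery"),
)
members_after_action = set(vpn_group)
ledger.revert_last()
members_after_revert = set(vpn_group)
print(members_after_action, members_after_revert, entry.reverted)
```

Because the same ledger can hold entries for human administrators, it gives a review board one place to examine both kinds of change.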

Common pitfalls:

- Overconfidence in large language models without sufficient grounding.

- Failing to design safe handoffs between AI and humans.

- Relying on non-versioned knowledge sources, which results in hallucinated answers and degraded trust.
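The pitfalls above suggest a simple gate in front of any agent action: hand off to a human when confidence is low, when the answer is not grounded in a versioned knowledge source, or when the action is high risk. The sketch below is illustrative only; the confidence score, the `KB-0042@v3` versioning convention, the risk list, and the 0.8 threshold are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical list of actions that always require human approval.
HIGH_RISK_ACTIONS = {"delete_account", "change_firewall_rule"}


@dataclass
class AgentProposal:
    ticket_id: str
    action: str
    confidence: float  # 0.0 to 1.0, as reported by the agent (assumed)
    source_article: Optional[str]  # assumed convention: "KB-0042@v3"


def route(proposal, min_confidence=0.8):
    """Decide whether the agent may act or a human must take over, with reasons."""
    reasons = []
    if proposal.confidence < min_confidence:
        reasons.append("low confidence")
    if not proposal.source_article or "@v" not in proposal.source_article:
        reasons.append("no versioned knowledge source")
    if proposal.action in HIGH_RISK_ACTIONS:
        reasons.append("high-risk action")
    target = "human" if reasons else "agent"
    return {"route": target, "ticket_id": proposal.ticket_id, "reasons": reasons}


grounded = route(AgentProposal("INC-1", "restart_service", 0.93, "KB-0042@v3"))
ungrounded = route(AgentProposal("INC-2", "restart_service", 0.95, None))
risky = route(AgentProposal("INC-3", "delete_account", 0.60, "KB-0007@v1"))
print(grounded, ungrounded, risky, sep="\n")
```

Returning the reasons with the routing decision means the human who receives the handoff sees why the agent stopped, which supports the trust the handoff is meant to protect.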

Phase 4: Optimisation & maturity scaling (optional but strategic)

For organisations ready to go beyond functional AI usage, the final phase involves refining the AI-human symbiosis. This includes continuous tuning of prompts, feedback loops, and trust calibration.

Service Experience Maturity Models, especially those that account for omnichannel journeys, multilingual support, and predictive analytics, come into play. Your AI agents should now participate in retrospective analyses, contributing to service reviews and knowledge updates.

This phase also calls for advanced knowledge governance. Metadata policies, taxonomies, article lifecycle rules, and SME review processes must be enforced systematically. Without them, AI performance plateaus or backslides.
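Systematic enforcement can start with something as small as a scheduled check that flags articles breaking lifecycle rules. The following sketch assumes a 180-day review window and article records with `last_reviewed`, `owner`, and `tags` fields; these rules and field names are hypothetical and would be replaced by your own governance policy.

```python
from datetime import date, timedelta


def review_queue(articles, today, max_age_days=180):
    """Return (article_id, problems) for every article that breaks a lifecycle rule."""
    cutoff = today - timedelta(days=max_age_days)
    flagged = []
    for article in articles:
        problems = []
        if article["last_reviewed"] < cutoff:
            problems.append("review overdue")
        if not article.get("owner"):
            problems.append("no SME owner")
        if not article.get("tags"):
            problems.append("missing taxonomy tags")
        if problems:
            flagged.append((article["id"], problems))
    return flagged


# Hypothetical knowledge-base records.
articles = [
    {"id": "KB-1", "last_reviewed": date(2025, 3, 10), "owner": "desk", "tags": ["access"]},
    {"id": "KB-2", "last_reviewed": date(2024, 1, 15), "owner": "netops", "tags": ["vpn"]},
    {"id": "KB-3", "last_reviewed": date(2025, 5, 1), "owner": None, "tags": []},
]

queue = review_queue(articles, today=date(2025, 6, 1))
print(queue)
```

Passing `today` in explicitly keeps the check deterministic, which makes it easier to test and to rerun against historical snapshots.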

Common pitfalls:

- Treating optimisation as an afterthought.

- Letting knowledge decay reintroduce inefficiencies.

- Assuming that agent performance improves linearly with usage.

Final thoughts

Organisations that prepare now through methodical assessments, structured automation, and responsible agent enablement will be best placed to thrive as ITSM becomes increasingly agentic. The point is not to replace people but to make sure that both human and virtual agents can do what they do best, without obstruction.

In the words of a senior ITSM advisor I once worked with, “An AI agent is only as good as the ecosystem it inhabits.” That ecosystem must be semantic, traceable, learnable, and above all - governed.

The recommendation is straightforward: before implementing agents, prepare the stage. Examine your workflows, invest in knowledge management, clean up your service catalogue, and design for explainability. Then, and only then, introduce agents who can navigate it.
